The AI craze is in full swing. New Large Language Models launching one after another, research papers flooding in, your LinkedIn feed exploding, GPUs as scarce as shovels during the Gold Rush, and you still fool yourself that you’ll catch up on all of it. Probably the sooner, the better.

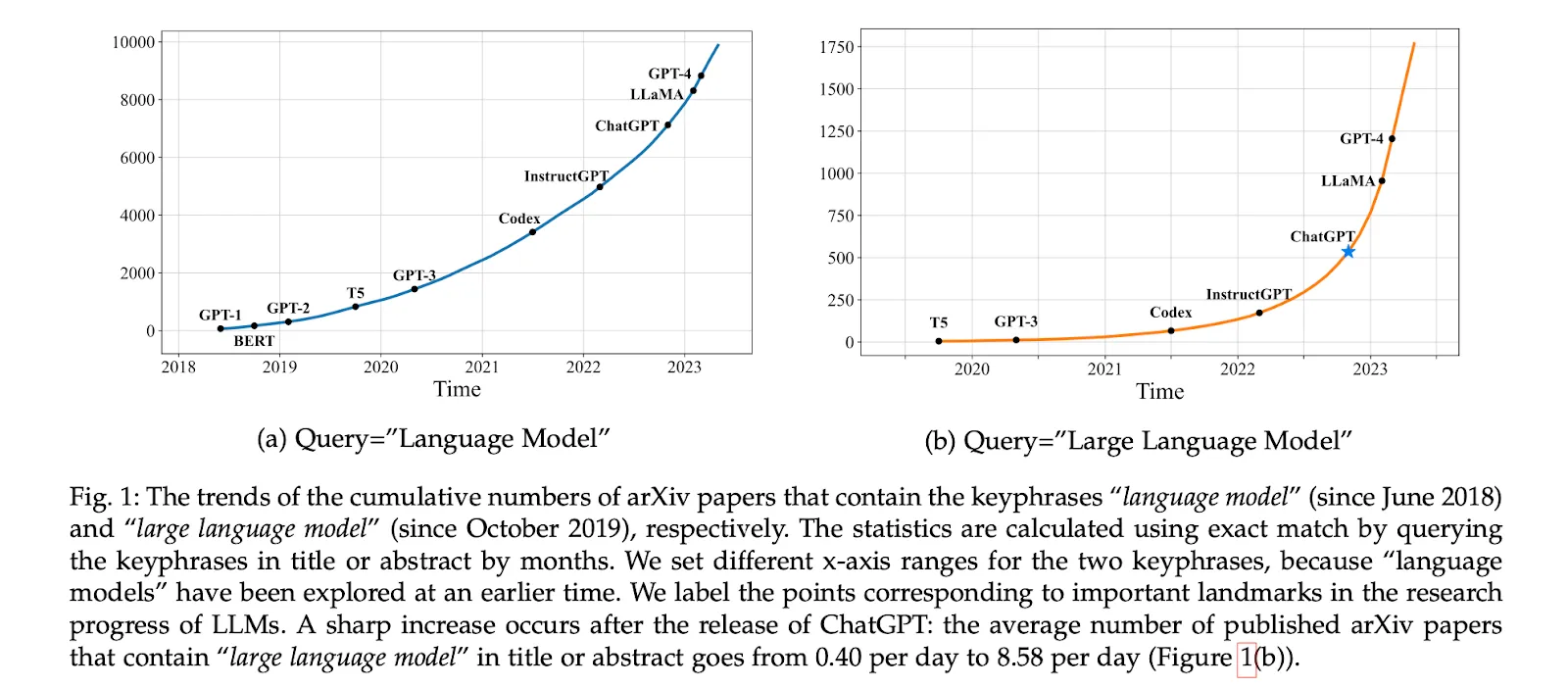

The number of research papers with “Large Language Model” in the title increased more than 21-fold in 2023, from 0.40 per day to more than 8, after ChatGPT was made public in late 2022.

Source: A Survey of Large Language Models, 2023, WX Zhao et al.

…And I won’t even attempt to guess how many times a “Large Language Model” was mentioned in LinkedIn posts. Or the rumors that ChatGPT died last year.

The perception of AI in business has rapidly shifted from a nice-to-have to an absolute necessity for staying afloat. Luckily, implementing AI is getting somewhat easier with the rise of AIaaS (Artificial Intelligence as a Service).

But, how on Earth did we get here? Let’s talk history for a bit.

Brief history of Artificial Intelligence (as a Service)

As already mentioned, AI is everywhere, and we owe this AI proliferation largely to major developmental milestones being reached in the world of generative AI.

The models got bigger, and they got better (meaning more knowledgeable and capable in general). Instead of being able to expertly learn one specific task, they became “generalists” — and they do it well!

But being a generalist isn’t enough if you want to use it for a specific business use case. Customization has always been around because of that.

Tailoring AI to your business needs

In the good ol’ days (alright, that’s literally like two years ago), to customize a model for your particular use case, say to build a dog detector, the only option you had was to:

- Take a model that is good at a general task such as recognizing objects in images.

- Gather some data specific to your particular use case (a lot of pictures of dogs).

- Teach the model what a dog looks like.

- Use it, and boom, you have your custom AI with great ROI.

That is the optimistic scenario, at least.

In a more realistic case, the time between deciding to experiment with AI in your business and seeing ROI is usually counted in months, possibly with many iterations of the data and algorithm before reaching any reasonable output.

For companies just experimenting with AI, and where AI is not at the epicenter of the product, this was usually too big of a time and money investment to make for something that may or may not end up a source of revenue.

That’s why the number of companies experimenting with AI adoption used to be substantially lower, and has only recently boomed.

What happened?

Well, the FOMO effect, to some extent.

But there’s another, perhaps more important reason. As the models got bigger, they got more knowledgeable and capable in general, and so a brand new learning technique emerged on the horizon: in-context learning.

In-context learning allows for creating customized model behavior using natural language prompts.

You simply tell the AI, in your own words, any relevant information and what you expect it to do with it. Such as “I have these examples of news headlines. Generate 10 similar ones.”

This new technique, in-context learning, turned out to be very successful.

This means a shift from needing to “fine-tune” the model (supplying thousands of highly curated examples and thereby changing the model’s internal parameters to customize its behavior) to being able to give a natural language description of the desired behavior, accompanied by zero to a few examples.
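To make the idea concrete, here is a minimal sketch of how such a prompt might be assembled in Python. The function name `build_few_shot_prompt` and the sample headlines are made up for illustration; the resulting string is simply what you would send to whichever hosted model you use.

```python
def build_few_shot_prompt(instruction: str, examples: list[str]) -> str:
    """Combine a plain-language instruction with zero or more examples."""
    lines = [instruction.strip()]
    if examples:
        lines.append("")
        lines.append("Examples:")
        lines.extend(f"- {example}" for example in examples)
    return "\n".join(lines)


# Hypothetical headlines, invented for this illustration.
headlines = [
    "Local bakery wins national bread award",
    "City council approves new bike lanes",
]
prompt = build_few_shot_prompt(
    "I have these examples of news headlines. Generate 10 similar ones.",
    headlines,
)
print(prompt)
```

Passing an empty list of examples gives a “zero-shot” prompt: the instruction alone, with no model training involved either way.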

Long story short: You don’t need to “do anything to the model” to get good results for your business anymore.

If you don’t need to “do anything to the model”, then you don’t need to have your own copy of that model.

If you don’t need to have your own copy of a model, and you want to play around with AI because it just got good, you’re probably on the lookout for someone who will host the model for you and provide it as a service. In fact, AI as a Service fills exactly that niche in the market. It makes AI plug-and-playable.

Wait, so what is AIaaS, exactly?

Artificial Intelligence as a Service (AIaaS) means commoditizing AI models and tools and providing access to them as a service.

It allows more businesses to experiment with AI, significantly shortens the path to initial ROI, and removes the need to invest excessive amounts of time or money. But we’ll get to the benefits of AIaaS in a bit.

Basically, any business can now communicate with the service while a third party — an AIaaS provider — takes care of the AI infrastructure.

It’s like hiring an accountant for your company so you don’t have to get into the nitty-gritty of the tax laws yourself. And who’s the accountant?

Well, for example, Together.

AIaaS companies — honorable mentions

Together.ai is one of the AI solution providers that gives Artificial Intelligence its “Linux moment” by providing an open ecosystem across compute and foundation models.

Founded in June 2022 by four techpreneurs and computer scientists, the company raised a $20 million seed round in May 2023. Only 6 months later, it announced a second fundraise of $102.5 million, welcoming top investors such as Kleiner Perkins and Nvidia.

Plenty of great companies use their service, like Pika Labs, which lets you create incredible videos from a text prompt.

Of course, it’s only one example — although prominent — of an AIaaS company.

Let’s learn all the types. Examples included.

What are the types and examples of AIaaS companies?

Bots, Chatbots, and Assistants

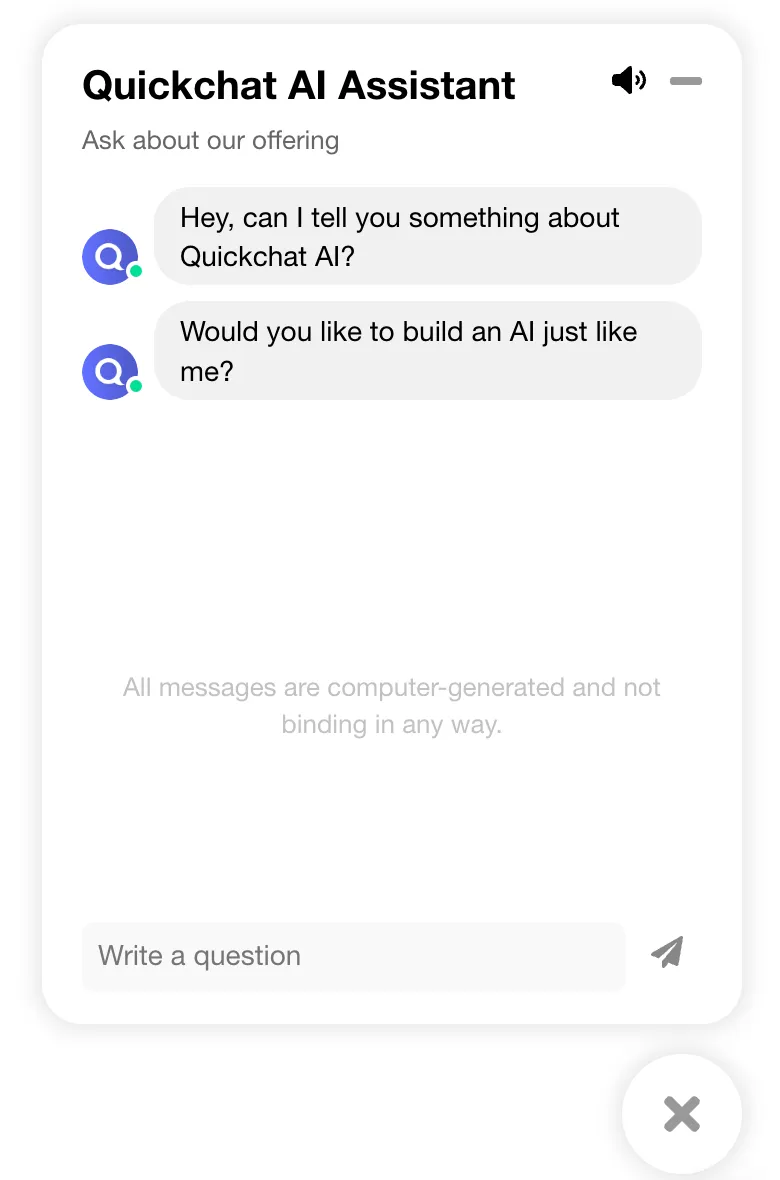

The most common type and the easiest to use for companies without technical expertise in AI.

These companies specialize in providing conversational AI agents, commonly known as AI chatbots or text or voice assistants, to businesses and organizations.

These bots are designed to simulate human-like conversations with users, offering them assistance, answering questions, and performing tasks through an expanding array of modalities (text, voice, images).

In the business world, the most common use case is customer support. It is already widely popular across the web, especially on e-commerce websites, where a rising volume of customer inquiries is a real challenge that pushes companies towards automation based on conversational AI.

You know, these little chats in the bottom right of a website that are sometimes actually useful?

Yeah, they’re probably using artificial intelligence, which is a leap forward from chatbots based on decision trees that use pre-defined paths of conversation flows.

They are capable of not only answering customer service inquiries but also creating support tickets, booking meetings and providing personalized recommendations.

But that’s only the customer support realm.

They can also run consumer research projects, surveying thousands of respondents at the same time, or act as an internal expert on a company’s policies and knowledge.

It’s still the beginning, the tech is getting better, and the only thing the list of potential use cases does is grow. With developments like RAG (Retrieval-Augmented Generation), AI chatbots can easily become experts in a particular domain without the hassle of fine-tuning.
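As a rough illustration of the RAG idea, the toy sketch below picks the most relevant snippet from a small knowledge base and places it in the prompt. Everything here is an assumption made for illustration: the `knowledge_base` entries, the helper names, and the simple word-overlap scoring, which stands in for the embedding-based search that real systems typically use.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str]) -> str:
    """Return the document sharing the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda doc: len(question_words & tokenize(doc)))


def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Prepend the retrieved context to the user's question."""
    context = retrieve(question, documents)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical company policies, invented for this illustration.
knowledge_base = [
    "Refunds are available within 30 days of purchase.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available on weekdays from 9 to 17.",
]
rag_prompt = build_rag_prompt("How long does shipping take?", knowledge_base)
print(rag_prompt)
```

The model itself is never retrained here; the domain knowledge travels inside the prompt, which is why this approach pairs well with hosted models.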

Company or product examples: Quickchat AI, ChatGPT (OpenAI), Google Bard, Claude (Anthropic)

Application programming interfaces (APIs)

That’s the bucket Together falls into.

Application Programming Interfaces (APIs) allow different applications to share information and interact with each other.

In the context of this article, it means that you can use an API to access an external AI model and weave it into your company’s digital fabric, so there’s no need to build the model yourself from scratch.

We can divide these companies into two subgroups differing by the type of models they provide access to:

- Proprietary models

These APIs serve as gateways to highly sophisticated, proprietary artificial intelligence technologies that have been developed, trained, and refined by leading AI research organizations and companies, such as OpenAI or Cohere.

The proprietary nature of these models means they incorporate unique algorithms, vast datasets, and advanced learning techniques that are not available in the public domain.

- Open-source (and selected proprietary models)

Companies such as Together, Anyscale, and Amazon Bedrock enable businesses to leverage open-source models through accessible, scalable APIs.

Unlike proprietary models, open-source AI models are developed within a community, allowing for broad collaboration, innovation, and transparency.

These APIs are designed to be developer-friendly, offering straightforward integration paths for adding AI functionalities to applications, services, or systems.
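To give a feel for what such an integration might look like, here is a minimal sketch that prepares an HTTP request to a hosted model using only Python’s standard library. The endpoint URL, model name, API key, and the chat-style JSON payload are all placeholders and assumptions, not any specific provider’s API; check your provider’s documentation for the real values.

```python
import json
import urllib.request

# Hypothetical endpoint and key: replace with your provider's values.
API_URL = "https://api.example.com/v1/chat/completions"
API_KEY = "YOUR_API_KEY"


def build_request(user_message: str, model: str = "example-model") -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST request to a hosted model."""
    payload = {
        "model": model,  # hypothetical model name
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("Write a tagline for a dog-walking app.")
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

The request is only built, not sent, so the sketch runs without network access or a real key.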

Why might someone want to use open-source models instead of proprietary ones?

Here are the main factors:

- Cost: Access to proprietary APIs often comes at a premium. The cost can be prohibitive for startups or smaller businesses, especially when usage scales up.

- Flexibility: With proprietary APIs, users are generally limited to the functionalities and data sets provided by the API, with little room for customization or modification of the underlying model.

- Dependency: Relying on a proprietary API can lead to vendor lock-in, where businesses become dependent on a specific provider for updates, improvements, and continued access to the service.

- Community support: Open-source projects benefit from the support of a large community of developers and researchers, who can help with troubleshooting and with whom you can exchange knowledge.

The use cases for APIs are vast, as they depend on the model.

They include developing custom conversational AI solutions, conducting image and speech recognition tasks, and performing data analysis and predictive modeling.

These APIs can also support more technical and specialized applications, such as scientific research, autonomous vehicle development, and real-time analytics.

Company or product examples: OpenAI, Cohere, Anthropic, Together, Anyscale, Amazon Bedrock

No-code/Low-code machine learning services

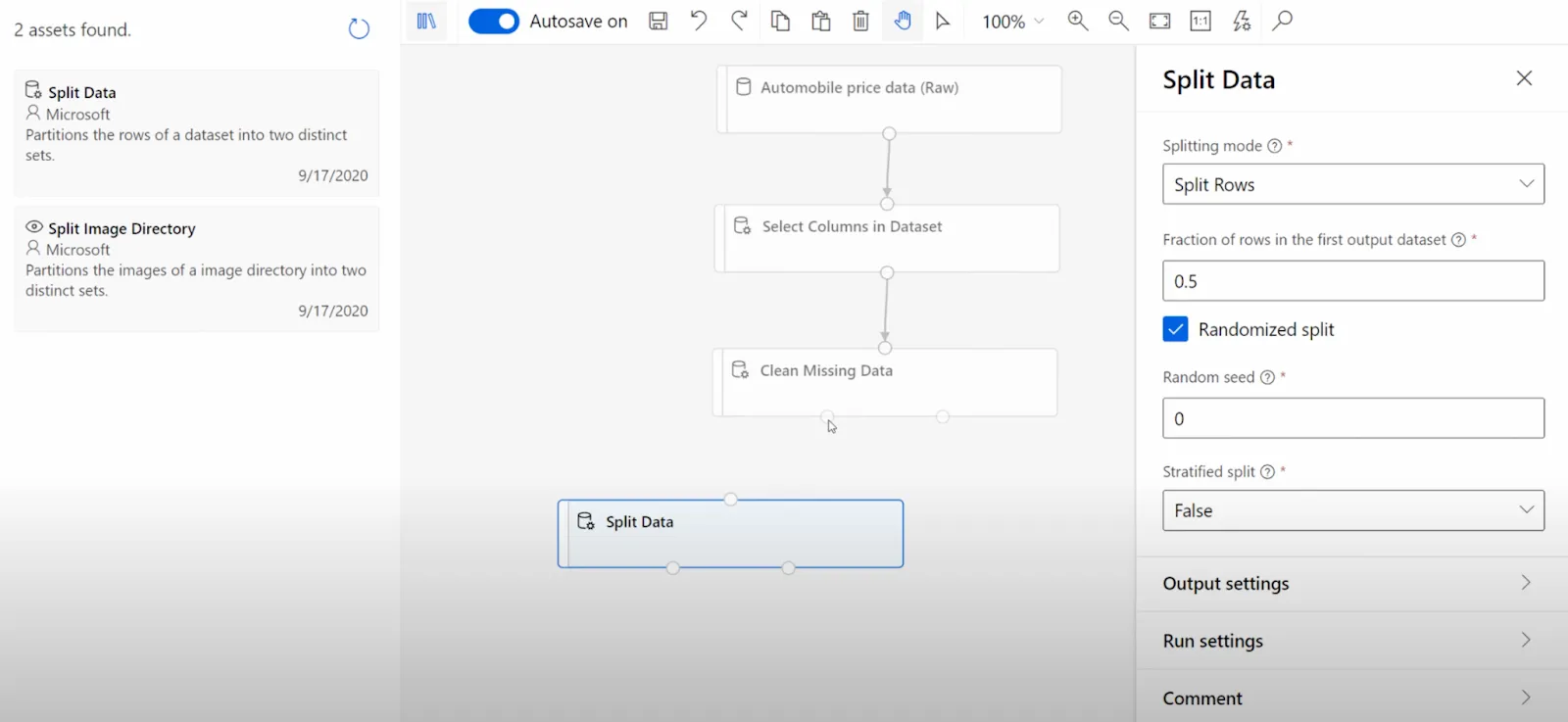

No-code/Low-code machine learning services are designed to simplify the process of developing, training, and deploying machine learning models without requiring deep programming knowledge or expertise in machine learning algorithms.

They provide intuitive user interfaces, drag-and-drop elements, and pre-built templates that enable users to create complex AI solutions through user-friendly processes.

It looks like this:

Microsoft Azure Machine Learning Studio interface

It may remind you of other visual builder products.

As these platforms evolve, they continue to lower the barriers to entry for machine learning, accelerating the pace of AI innovation across industries.

Company or product examples: Microsoft Azure ML Studio, Google Cloud AutoML, AWS SageMaker

Great, but what are the benefits of using AI technology platforms as a service?

We covered some of them in the previous section, but in general, companies offering AIaaS solutions tackle the following problems:

- The lack of flexibility and vendor lock-in.

In contrast to building and maintaining your own AI infrastructure, AIaaS allows you to switch fast between vendors and models and choose the best options for your business.

These APIs offer pay-as-you-go pricing and very easy integration, so you can try multiple options with minimal effort.

That way, you don’t have to put all your eggs in one AI basket. You can build, for example, fallback chains that help ensure service continuity for your product when one provider fails.
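A fallback chain can be as simple as trying providers in order until one answers. The sketch below is one possible shape, with stand-in provider functions invented for illustration; in a real product each of them would wrap a call to a different vendor or model.

```python
from typing import Callable


def call_with_fallback(prompt: str, providers: list[Callable[[str], str]]) -> str:
    """Try each provider in order and return the first successful answer."""
    errors = []
    for provider in providers:
        try:
            return provider(prompt)
        except Exception as error:  # in real code, catch narrower error types
            errors.append(f"{provider.__name__}: {error}")
    raise RuntimeError("All providers failed: " + "; ".join(errors))


# Stand-in providers, invented for this illustration.
def primary_provider(prompt: str) -> str:
    raise TimeoutError("service unavailable")


def backup_provider(prompt: str) -> str:
    return f"[backup] answer to: {prompt}"


answer = call_with_fallback("Hello!", [primary_provider, backup_provider])
print(answer)
```

Because the providers are plain functions with the same signature, swapping vendors or reordering the chain is a one-line change.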

- Access to new models.

New stuff comes out every day, and AIaaS gives you access to it. AIaaS companies like Together deploy models the moment they become available online, so you can get your hands on them right away.

- Cost efficiency.

It’s much cheaper to use an API than to host the model yourself, especially when you don’t have millions of requests per day.

Not convinced? Let’s do some math.

The ChatGPT API is priced by usage. With GPT-3.5, it costs $0.002 per 1K tokens (tokens are the parts of words that LLMs use for processing).

A standard page of text contains about 500 words, which translates to roughly 667 tokens. At that price per token, generating 1,000 pages of text in our example would cost you about $1.33.

Which is quite accessible, even for small businesses.
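The arithmetic is easy to check yourself. Here is a small sketch using the figures quoted above; the constant and function names are made up for illustration, and the tokens-per-page value is the rough estimate from the text, not an exact conversion.

```python
PRICE_PER_1K_TOKENS_USD = 0.002  # price quoted above
TOKENS_PER_PAGE = 667            # about 500 words per page, as estimated above


def api_cost_usd(pages: int) -> float:
    """Estimated API cost in dollars for generating the given number of pages."""
    total_tokens = pages * TOKENS_PER_PAGE
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS_USD


print(f"${api_cost_usd(1000):.2f}")  # prints $1.33
```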

If you decide to host a Large Language Model yourself, you’ll pay hosting and hourly costs to a cloud service provider like AWS, which, according to their pricing, would amount to $20,000.

We’ll get back to that point in a moment to show how “cost efficiency” can become a “cost inefficiency” and therefore a disadvantage for some. But for now, let’s move on to the fourth aspect:

- Ease of integration and robustness.

To use a model you host, you’d have to build an API yourself anyway and maintain the model. AIaaS APIs are designed to be easily integrated into existing applications and systems. This can save you time and resources compared to developing and maintaining your own AI models.

- Scalability and speed.

AIaaS companies provide API access to AI models, and they focus solely on that. Their main goal is to make their products as scalable and as fast as possible to attract the most traffic.

Solving people’s problems can bring you money and it indeed brought some in the case of AI as a Service companies like Together.

So, how do they make it?

They host open-source models on their own GPU clusters, which means they don’t incur the cost of developing the models themselves, and generate revenue by hosting them on their own clusters and letting people use them.

Sounds like a dream. You may rightfully ask now:

Are there any drawbacks to using Artificial Intelligence as a Service?

What is an advantage can sometimes also be a disadvantage like…

- Dependency.

You don’t build it yourself, so you need to rely on an external AI service, which means that your application’s performance is dependent on the availability and reliability of the AIaaS solution provider. If the AI service experiences downtime or disruptions, it may impact your application.

- Data privacy.

When using an AIaaS API, your data is processed externally, which may raise concerns about data privacy and security.

And yeah, data privacy is a huge topic right now in AI. The process of training LLMs is controversial in itself.

But you must be doubly cautious if you work in sensitive industries like financial or medical services and process your customers’ data.

- Customization (or lack thereof).

While AIaaS APIs offer pre-trained models for various tasks, they may not perfectly align with your specific requirements. If your use case demands highly customized models (e.g. you need to modify the model architecture), building and training your own models may be a better option.

- Long-term costs.

Again, the pay-as-you-go pricing model may be very cost-efficient for many, probably most, businesses. But if you have A LOT of traffic, it may cost you more than hosting the model yourself.

I mentioned how using AIaaS companies like Together may be cost-efficient for you and what it would cost to generate 1,000 pages.

Let’s now assume your needs are much bigger than this and you generate 1 million pages a day. The cost quickly rises to about $1,300 a day, which means that by the end of the year you’ll have paid almost half a million dollars. That is out of reach for most businesses and cost-inefficient. In such cases, hosting the model yourself on a cloud instance will be significantly cheaper.
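To see where the tipping point might be for you, the sketch below extends the same math to a yearly bill and a break-even volume. The price and tokens-per-page figures come from the earlier example; the self-hosting budget is a pure placeholder that you should replace with your own estimate, and the function names are made up for illustration.

```python
PRICE_PER_1K_TOKENS_USD = 0.002  # API price quoted earlier in the article
TOKENS_PER_PAGE = 667            # about 500 words per page
DAYS_PER_YEAR = 365


def yearly_api_cost_usd(pages_per_day: float) -> float:
    """Estimated yearly API bill for a constant daily page volume."""
    tokens_per_day = pages_per_day * TOKENS_PER_PAGE
    return tokens_per_day / 1000 * PRICE_PER_1K_TOKENS_USD * DAYS_PER_YEAR


def break_even_pages_per_day(self_hosting_usd_per_year: float) -> float:
    """Daily volume at which the API bill equals the self-hosting budget."""
    cost_per_page = TOKENS_PER_PAGE / 1000 * PRICE_PER_1K_TOKENS_USD
    return self_hosting_usd_per_year / (cost_per_page * DAYS_PER_YEAR)


print(f"1 million pages/day via API: ${yearly_api_cost_usd(1_000_000):,.0f} per year")

# Placeholder budget, NOT a real quote: plug in your own self-hosting estimate.
hypothetical_self_hosting_budget = 100_000
pages = break_even_pages_per_day(hypothetical_self_hosting_budget)
print(f"Break-even at roughly {pages:,.0f} pages/day")
```

Below the break-even volume the API is the cheaper option under these assumptions; above it, self-hosting starts to pay off.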

Is AIaaS the right choice for your business?

AI adoption is still in its infancy and the technology remains expensive. But historically, technology doesn’t stay that way, and we can already see that it has become cheaper (in terms of money, and also computationally) to train and use models.

As AI matures, we might find that some important aspects of it (such as privacy, customization, and independence from vendors) simply outweigh the benefits of AIaaS.

On the other hand, as AI becomes omnipresent in businesses, we might experience AIaaS becoming the conventional way to use AI, letting dedicated companies do the “dirty work” for us while we focus on our specific use case.

I’ll refrain from making predictions because it’s too much of a gamble. But that definitely shouldn’t hold you back from experimenting with AI. AIaaS vendors are great for it and significantly lower the barrier to entry.

Take advantage of that and go exploring.

And if you are already thinking about fine-tuning your own models, check whether it would be overkill for you by reading “RAG vs Fine-tuning for your business? Here’s what you need to know”.