“Waves crash, tails wagging,

Salty breeze, joyful barking,

Sea meets loyal friend.”

“Leonardo da Vinci painted the Mona Lisa in 1815”.

What do this haiku and this piece of trivia about an Italian painter have in common? They were both generated by ChatGPT, and they’re both excellent examples of AI hallucinations.

What sets them apart is that one is a lovely haiku about dogs and the sea, and the other is a straight-up lie.

But let’s not get ahead of ourselves just yet.

What are AI hallucinations?

The concept of AI hallucination gained prominence during the current AI boom and the popularization of Large Language Models (LLMs) such as ChatGPT.

AI hallucinations, in the context of generative models, are plausible-sounding falsehoods, such as the year in which Leonardo da Vinci supposedly painted the Mona Lisa (it was in the early 1500s, btw).

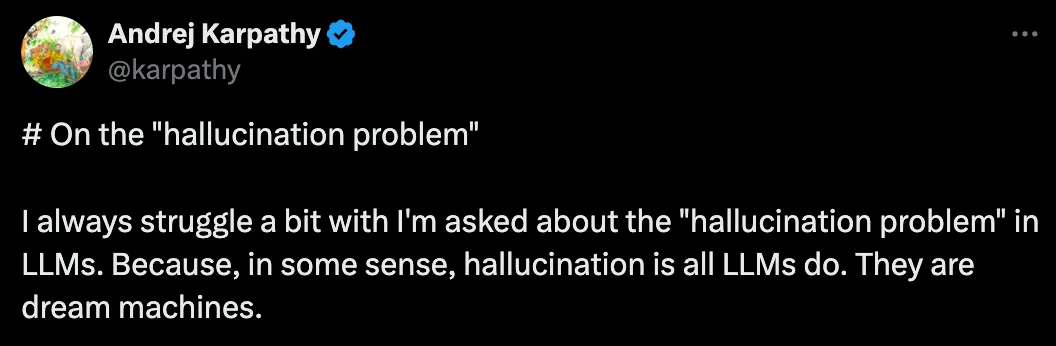

As Andrej Karpathy said: “In some sense, hallucination is all LLMs do. They are dream machines.”

Read the full Tweet at https://twitter.com/karpathy/status/1733299213503787018

So, strictly speaking, both cases are “LLM hallucinations”. Only the second one, however, is what we’d nowadays call an AI hallucination, because the response diverged from the user’s expectations.

Why do AI chatbots hallucinate, then?

Generative AI models (LLMs included) have no real intelligence — they learn how likely the next piece of data is to occur based on patterns they pick up from an enormous number of examples, usually sourced from the public web.

In other words, they’re statistical systems that expertly predict words, images, speech, music, or whatever modality you feed to the model.
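To make “statistical systems that predict words” concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a made-up corpus and then greedily appends the most likely next word. This is a toy stand-in, not how LLMs are actually built (they use neural networks over tokens and far more data), and the corpus and function names are hypothetical. What it does share with real models is that it always produces a continuation and never checks it against the facts.

```python
from collections import Counter, defaultdict

# Tiny made-up training corpus; real models learn from vastly more text.
CORPUS = (
    "leonardo da vinci painted the mona lisa . "
    "leonardo da vinci painted the last supper . "
    "the dog barked at the sea . "
    "the dog ran to the sea ."
)


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the follow-up counts for `word` into probabilities."""
    followers = counts.get(word)
    if not followers:
        return {}
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length=6):
    """Greedily append the most likely next word; nothing checks the facts."""
    out = [start]
    for _ in range(length):
        probs = next_word_probs(counts, out[-1])
        if not probs:
            break  # no continuation was ever seen after this word
        out.append(max(probs, key=probs.get))  # ties go to the first-seen word
    return " ".join(out)


if __name__ == "__main__":
    counts = train_bigrams(CORPUS)
    print(generate(counts, "leonardo"))  # leonardo da vinci painted the dog barked
```

In this corpus “dog” is (jointly) the most frequent word after “the”, so the model confidently stitches together “leonardo da vinci painted the dog barked”: locally plausible word by word, and completely wrong as a statement.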

When you think about it, every generation is a hallucination. Hallucination is an LLM’s only feature… and its only bug.

This is a classic “glass half full or half empty” problem: whether we admire or condemn AI hallucinations depends on what we expect the model to do. Consider the following:

- The more “creative” the output we prompt models to generate, the less we perceive it as a hallucination, and the more we are impressed by its creativity, intelligence, wit, or humor.

- On the other hand, the more “factual” and grounded in real-world information the output, the easier it is for us to spot an AI hallucination, as we have the ground truth to compare the answer against.

But what causes AI hallucinations?

Large Language Models have no way to tell a hallucination apart from a statement supported by facts.

Unlike people, they don’t have a dynamic world model to fact-check their responses, nor do they have senses that could help them tell truth from falsehood.

At the same time, they are trained to always produce output, so if they lack context, they fill in the gaps based on their learned patterns. They have to.

Our brains hallucinate too. If we lack context, the brain’s inherent need to make sense of incomplete or ambiguous information fills in the gaps, for better or for worse.

But our senses and the logical part of the brain can help us catch the “hallucination”.

Consider song lyrics, for instance. You may remember the chorus of the Bee Gees’ “Stayin’ Alive”, but getting through the verses will probably give you some trouble, so you end up improvising until you reach the chorus again.

Generative models hallucinate in a similar way. The difference is that when you realize you don’t know the lyrics, you can look them up online and refresh your memory.

By default, LLMs lack that kind of introspection and can’t reliably estimate how confident they should be in a prediction. When prompted, they produce the requested output in one go and forget about it.

However, like people, these models can, if given the chance, reason about and reflect on their answers to make them more accurate or to correct them when necessary.

How do we prevent AI hallucinations, then? (The unwanted ones, of course.)

Researchers keep proposing prompt engineering approaches to this end. A prominent one is Chain-of-Thought prompting, where the model is guided to break a problem down into smaller intermediate steps and work through them before answering. This reduces the complexity of each step, supports accurate multi-step reasoning, and makes responses more faithful, which helps reduce AI hallucinations.
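Here is a minimal sketch of what a chain-of-thought prompt might look like, assuming a one-shot setup: a worked example whose answer spells out its intermediate steps, followed by the new question and the cue “Let’s think step by step.” The helper name `build_cot_prompt` and the example question are hypothetical, and sending the prompt to an actual model is left out.

```python
# Hypothetical worked example used as a one-shot demonstration.
EXAMPLE_QUESTION = "A cafe sells 3 muffins for $6. How much do 5 muffins cost?"
EXAMPLE_ANSWER = (
    "Step 1: 3 muffins cost $6, so one muffin costs 6 / 3 = $2.\n"
    "Step 2: 5 muffins cost 5 * $2 = $10.\n"
    "Answer: $10"
)


def build_cot_prompt(question: str) -> str:
    """Build a one-shot chain-of-thought prompt for a (hypothetical) LLM call."""
    return (
        f"Q: {EXAMPLE_QUESTION}\n"
        f"A: {EXAMPLE_ANSWER}\n\n"  # the demo answer shows its intermediate steps
        f"Q: {question}\n"
        "A: Let's think step by step."  # cue the model to reason before answering
    )


if __name__ == "__main__":
    print(build_cot_prompt("A train travels 120 km in 2 hours. How far does it go in 5 hours?"))
```

The idea is that the demonstration nudges the model to write out its own intermediate steps, which it can then build on, instead of jumping straight to a final answer.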

After all, the same generative nature that makes these models hallucinate is what allows them to “become” a skilled therapist, front desk manager, data analyst, or any other persona we assign to them via prompts to improve their performance on a given task.

This makes LLMs super versatile and widely usable.

Combine this versatility with external knowledge, provided to the model as context to ground its responses in real-world information, and you get a powerful system that harnesses generative AI and channels it to your advantage.

A prime example of this approach is Retrieval-Augmented Generation (RAG), the backbone of many LLM-powered chatbots. Instead of relying on the Large Language Model alone for correct information, a RAG system retrieves relevant facts from a knowledge base and passes them to the model, so it can ground its answers in them.

This way, we can still make the model act like a data analyst, customer support representative, or therapist, changing how it replies based on the role.

But we also ensure it has the right facts to use in its answers.
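To make the idea concrete, here is a minimal sketch of the retrieval and prompt-building steps of a RAG pipeline. It assumes a tiny, hypothetical in-memory knowledge base and uses simple word overlap as a stand-in for the embedding-based vector search that production systems typically use; the function names are hypothetical too, and the call to the LLM itself is left out. Note that the persona (`role`) and the retrieved facts are set independently.

```python
import re

# Hypothetical knowledge base; in a real system this would be your documents.
KNOWLEDGE_BASE = [
    "Leonardo da Vinci began painting the Mona Lisa around 1503.",
    "The Mona Lisa hangs in the Louvre in Paris.",
    "Stayin' Alive is a song by the Bee Gees.",
]


def word_set(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(query, docs, k=1):
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    q = word_set(query)
    ranked = sorted(docs, key=lambda d: len(q & word_set(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, docs, role="a helpful museum guide"):
    """Combine a persona, the retrieved facts, and the question into one prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k=2))
    return (
        f"You are {role}. Answer using only the facts below. "
        "If they don't contain the answer, say you don't know.\n\n"
        f"Facts:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_rag_prompt("When did Leonardo da Vinci paint the Mona Lisa?", KNOWLEDGE_BASE))
```

Swapping `role` changes how the model is asked to reply, while the “Facts” section keeps its answer tied to the knowledge base rather than to whatever pattern it would otherwise fill in.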

LLMs as part of larger systems

Perhaps LLMs nowadays are still more like parrots who learned to repeat words rather than sentient beings who have conscious control over what they say (which is not to say parrots aren’t sentient).

Just as our brains are part of a larger organism, perhaps we should see generative models as parts of bigger systems, where we harness their creative abilities but also make sure they stick to the facts.

Just as the human mind thrives on the edge between chaos and order, the potential of AI lies in its ability to navigate the fine line between real-world integrity and imaginative creation.

After all, it’s this very ability that has the power to transform industries, revolutionize art, and even redefine what it means to be human.

Beyond horizon,

Dreams and thoughts merge into one,

New paths unfold, bright.

(This haiku was also generated by ChatGPT.)